Amidst all the AI buzz, Microsoft Copilot has become a hot topic of conversation. Microsoft has rolled out Copilot across many of its products, including Microsoft 365, GitHub, and Dynamics 365, as well as its security tools. The excitement is real, and for good reason. Whether it’s drafting emails, extracting data from your document repository, or troubleshooting a security incident, Copilot has everyone eager to deploy it within their organisations.

But here’s the critical question: do you have the necessary security and governance measures in place before implementing Copilot? Why are they needed, and what happens without them? Let’s dig into these questions in this blog post.

Why do you need to secure Copilot?

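Copilot for Microsoft 365 accesses content and context through Microsoft Graph. It generates responses based on your organisational data, including SharePoint documents, Teams chats, emails, calendars, meetings, and contacts. Copilot only surfaces content the signed-in user already has permission to view, but that means any overshared content becomes much easier to find. And therein lies the security challenge.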

What if anyone can prompt and retrieve sensitive information using Copilot?

Imagine granting Copilot unrestricted access to your organisation’s data. Suddenly, anyone can query confidential salary data, health records (if you’re in the healthcare industry), financial records, and more.

Or picture someone using Copilot to retrieve passwords saved in a Word document. Or worse, an individual with malicious intent using Copilot to threaten or abuse coworkers. How can you prevent such scenarios?

This is where the risk of deploying Copilot without proper guardrails becomes evident: everyone gains access to everything, which is far from ideal.

So, what’s the solution? How do you implement the right guardrails so everyone in your organisation can use Copilot safely? Let’s group Copilot guardrails into three pillars.

1) Protect sensitive data

To protect your data, you must know where your information is, what information is stored, and who has access to it.

Let’s start with SharePoint, where many sites are created but rarely updated. Over time, new team members join while others leave or move within the organisation, and old SharePoint sites often still grant access to people who no longer need it. So, start with an access review to understand who has access to what, and where.
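
A review like this usually starts from an export of site permissions. The Python sketch below is a minimal, hypothetical illustration: it assumes you already have that export as a list of records (the `SitePermission` shape, the department mappings, and the `contoso.com` users are invented for illustration and are not a Microsoft API) and flags the two problems described above: leavers who still have access, and movers whose current department no longer matches the site.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class SitePermission:
    """One row of a hypothetical permissions export."""
    site: str
    user: str
    role: str  # e.g. "Read", "Edit", "Owner"


def find_leaver_access(permissions, active_users):
    """Permissions still held by users who are no longer active in the directory."""
    active = set(active_users)
    return [p for p in permissions if p.user not in active]


def find_mover_access(permissions, user_department, site_department):
    """Permissions where the user's current department differs from the site's owning department."""
    return [
        p for p in permissions
        if p.user in user_department
        and p.site in site_department
        and user_department[p.user] != site_department[p.site]
    ]


if __name__ == "__main__":
    perms = [
        SitePermission("Finance", "alice@contoso.com", "Edit"),
        SitePermission("Finance", "bob@contoso.com", "Read"),
        SitePermission("Projects-2019", "carol@contoso.com", "Owner"),
    ]
    active = ["alice@contoso.com", "bob@contoso.com"]
    departments = {"alice@contoso.com": "Finance", "bob@contoso.com": "Sales"}
    sites = {"Finance": "Finance", "Projects-2019": "Delivery"}
    for p in find_leaver_access(perms, active) + find_mover_access(perms, departments, sites):
        print(f"Review: {p.user} has {p.role} on {p.site}")
    # Review: carol@contoso.com has Owner on Projects-2019
    # Review: bob@contoso.com has Read on Finance
```

Each flagged row is a candidate for removal, not an automatic one; a site owner should confirm before access is revoked.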

Don’t stop at SharePoint: review your Teams channels, OneDrive, and any other application used for document storage, and check their privacy settings. Ensure there are rules in place, such as expiration or archiving policies, for teams or sites that are no longer used.

Make sure public sites that should be private are restricted, and deliberately exclude certain SharePoint sites from Copilot. You can do this with sensitivity labels, by excluding sites from search indexing, or with the new Restricted SharePoint Search feature.

2) Manage insider risk

Once you understand where the data is stored, the next step is to analyse what type of information it contains and who has access to it. For example, does anyone have unauthorised access to confidential financial data?

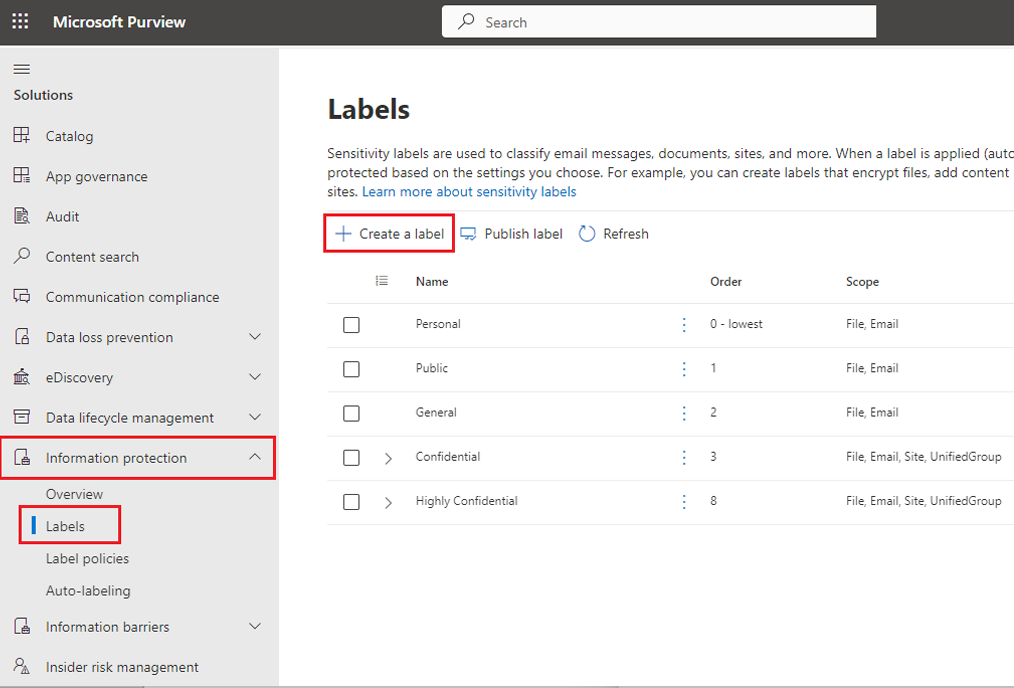

Microsoft Purview gives you visibility of data assets across your organisation, enabling you to restrict access effectively. Use Sensitive Information Types (SITs) in Purview to classify the data accessible to users, then restrict access according to job role and department.
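
Purview’s built-in SITs combine a pattern, validation such as a checksum, and supporting keywords to decide how confident a match is. The Python sketch below imitates that idea for credit card numbers in a deliberately simplified way: a regular expression finds candidate numbers, the Luhn checksum discards random digit strings, and the presence of a keyword anywhere in the text raises the confidence. The pattern, keyword list, and confidence levels are illustrative assumptions, not Purview’s actual SIT definition.

```python
import re

# Candidate card numbers: 13 to 19 digits, optionally separated by single spaces or hyphens.
CARD_PATTERN = re.compile(r"\b(?:\d[ -]?){12,18}\d\b")
# Hypothetical supporting keywords that raise confidence when present.
KEYWORDS = ("credit card", "card number", "visa", "mastercard")


def luhn_valid(number: str) -> bool:
    """Luhn checksum: double every second digit from the right, sum, check divisibility by 10."""
    digits = [int(ch) for ch in number if ch.isdigit()]
    total = 0
    for i, d in enumerate(reversed(digits)):
        if i % 2 == 1:
            d *= 2
            if d > 9:
                d -= 9
        total += d
    return total % 10 == 0


def classify(text: str):
    """Return (match, confidence) pairs for card-like numbers that pass the Luhn check."""
    lowered = text.lower()
    has_keyword = any(k in lowered for k in KEYWORDS)
    return [
        (m.group(), "high" if has_keyword else "medium")
        for m in CARD_PATTERN.finditer(text)
        if luhn_valid(m.group())
    ]
```

For example, `classify("Card number: 4111 1111 1111 1111")` returns one high-confidence match, while a number that fails the checksum returns nothing.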

For example, should users in your managed services team have access to commercial data, or vice versa? Answering questions like this lets you create barriers that stop users from accessing things they shouldn’t, which in turn restricts what they can query and retrieve using Copilot.
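
The same principle can be expressed as a deny-by-default access matrix. This is a hypothetical sketch: the department names, data categories, and the `can_access` and `filter_for_user` helpers are invented for illustration, and in practice this logic lives in SharePoint permissions, sensitivity labels, and Purview policies rather than in your own code. Because Copilot can only ground its answers on content the user can already open, restricting access at this layer also limits what Copilot can return.

```python
# Hypothetical policy: which departments may access each data category.
ACCESS_POLICY = {
    "commercial": {"Sales", "Finance"},
    "managed-services": {"Managed Services"},
    "general": {"Sales", "Finance", "Managed Services", "HR"},
}


def can_access(department: str, category: str) -> bool:
    """Deny by default: unknown categories are not accessible to anyone."""
    return department in ACCESS_POLICY.get(category, set())


def filter_for_user(department, documents):
    """Keep only the (name, category) documents the user's department may see."""
    return [name for name, category in documents if can_access(department, category)]
```

Denying by default means a newly created category stays locked until someone deliberately grants access, which is the safer failure mode for sensitive data.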

Remember that certain data, such as financial records, health information, and personal data covered by GDPR, shouldn’t be accessible to everyone. Apply sensitivity labels so that these sensitive data types can’t be surfaced through Copilot.

We also suggest using Compliance Manager in Purview and monitoring your compliance score from day one, whether or not you deploy Copilot. Compliance Manager gives you an overall score based on your adherence to key regulations and standards for data protection and general data governance. Monitor this score actively and complete the improvement actions Purview recommends to strengthen your organisation’s overall security posture.

Next, control the types of prompts used in Copilot and the information they retrieve.

For example, detect prompts with malicious intent, such as racial abuse, threats, or violent language. Microsoft Purview offers out-of-the-box classifiers that identify this kind of content automatically, and you can add your own custom keyword lists to make sure Copilot remains a safe space for users.

When enabling Copilot in your organisation, consider implementing a communication compliance policy that captures all user prompts. Within the policy, define sensitive information types and key phrases, such as board members, salary information, and other critical data. If a prompt triggers one of these terms, generate an alert for your internal security team and senior managers. This layer of governance plays a crucial role in identifying malicious Copilot usage.
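
To make the flow concrete, here is a minimal Python sketch of what such a policy does conceptually: record every prompt, match it against a list of sensitive terms, and raise an alert for the named recipients. The term list, recipient addresses, `Alert` record, and `review_prompt` function are all hypothetical; a real deployment would configure this in Microsoft Purview rather than in custom code.

```python
import re
from dataclasses import dataclass, field
from datetime import datetime, timezone

# Hypothetical policy terms; in Purview these would be SITs or keyword lists.
SENSITIVE_TERMS = ("board members", "salary", "acquisition")
# Hypothetical alert recipients.
ALERT_RECIPIENTS = ("security-team@contoso.com", "senior-managers@contoso.com")


@dataclass
class Alert:
    user: str
    prompt: str
    matched_terms: list
    recipients: tuple = ALERT_RECIPIENTS
    raised_at: datetime = field(default_factory=lambda: datetime.now(timezone.utc))


def review_prompt(user, prompt, audit_log):
    """Record the prompt in the audit log, then return an Alert if it contains a sensitive term."""
    audit_log.append((datetime.now(timezone.utc), user, prompt))
    lowered = prompt.lower()
    matched = [
        term for term in SENSITIVE_TERMS
        # Whole-word match so a term does not fire inside unrelated longer words.
        if re.search(rf"\b{re.escape(term)}\b", lowered)
    ]
    return Alert(user, prompt, matched) if matched else None
```

Every prompt is logged whether or not it matches, so investigators still have the full history when an alert fires.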

3) Prevent data loss

Lastly, create and deploy data loss prevention (DLP) policies for SharePoint, Exchange, OneDrive, and other locations to monitor what data is being accessed, transferred, and sent outside the organisation.

To break this down further, create individual policies that alert when specific Sensitive Information Types (SITs) are exfiltrated to listed third-party applications (such as Dropbox), or when the information being transferred exceeds a size limit your organisation defines.
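
The two alert conditions above can be sketched as a simple rule evaluator. This is an illustration only, assuming a hypothetical `Transfer` record and made-up settings: the destination list, the 25 MB threshold, and the monitored SIT names are examples, not recommendations. Actual DLP rules are configured in Microsoft Purview, which evaluates the conditions and actions for you.

```python
from dataclasses import dataclass

# Hypothetical organisation settings.
BLOCKED_DESTINATIONS = {"dropbox.com", "wetransfer.com"}
MAX_TRANSFER_BYTES = 25 * 1024 * 1024  # assumed 25 MB limit
MONITORED_SITS = {"Credit Card Number", "EU Passport Number"}


@dataclass
class Transfer:
    user: str
    destination: str
    size_bytes: int
    detected_sits: set


def evaluate(transfer):
    """Return the reasons this transfer should raise an alert (empty list if none)."""
    reasons = []
    sits = transfer.detected_sits & MONITORED_SITS
    # Rule 1: monitored sensitive data sent to a listed third-party destination.
    if sits and transfer.destination in BLOCKED_DESTINATIONS:
        reasons.append(f"SIT(s) {sorted(sits)} sent to {transfer.destination}")
    # Rule 2: any transfer above the organisation's size limit.
    if transfer.size_bytes > MAX_TRANSFER_BYTES:
        reasons.append(f"transfer of {transfer.size_bytes} bytes exceeds limit")
    return reasons
```

Keeping each rule independent mirrors the advice above to create individual policies, so each alert tells the security team exactly which condition was met.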

These alerts can be fed directly to your security team or managed services provider, who can then prevent your sensitive data from ending up in the wrong location, where AI tools such as Copilot could access it.

In summary, protect your information by applying the right sensitivity labels, conducting access reviews, and auditing usage to ensure Copilot is accessed within a secure, governed environment.

To learn more about Copilot, join us for our Copilot Unlocked webinar series, where we will dive deep into Copilot guardrails, best practices, data governance principles, Copilot Studio and more. Sign up for the webinars and secure your seat today.